Arize AI alternatives: Top 5 Arize competitors compared (2026)

Bram P

Jan 30, 2026

TL;DR: Which Arize alternative fits your needs?

LangWatch wins for teams shipping production LLM applications with complex AI agents. Agent simulation testing, OpenTelemetry-native tracing, and evaluation workflows that engineers and PMs work on together make it the most complete platform for agentic AI.

Runner-up alternatives:

Langfuse - Open-source flexibility for small start-ups and dev-focussed teams

Fiddler AI - Enterprise ML and LLM monitoring, but lacks agent simulation

LangSmith - Great if you're locked into LangChain

Helicone - Proxy-based observability that routes LLM calls through its platform

Choose LangWatch if agent simulation (multi-turn conversations), automated prompt optimization, and cross-functional collaboration matter. Pick others if you only need basic logging or have specific constraints (e.g., LangChain-only, simple tracing).

Why teams look for Arize AI alternatives

Arize AI started as an ML model monitoring platform focused on traditional machine learning operations. The company built features for drift detection, model performance tracking, and tabular data monitoring, all designed for the predictive ML models of earlier years.

As LLM applications moved from prototypes to production, Arize expanded into generative AI through its Arize Phoenix open-source framework and later added LLM capabilities to its web application. Arize now offers LLM evaluation, prompt versioning through their Prompt Hub, and tracing for conversational applications.

However, teams building production LLM applications with AI agents encounter specific workflow gaps:

ML-first architecture. Arize's platform was built for traditional ML workflows. LLM evaluation, prompt management, and generative AI tracing were added later, creating a disconnected experience between classical model monitoring and agentic AI development.

No agent simulation testing. Arize focuses on observing what happened after the fact. Teams need to test complex multi-turn agent conversations, tool usage patterns, and edge cases before deployment through synthetic simulations.

Limited prompt optimization capabilities. Manual prompt engineering doesn't scale for complex agent systems. Teams need systematic, automated approaches to improve prompts using techniques like DSPy.

Evaluation and tracing live in separate workflows. Dataset management exists separately from the tracing interface. Correlating evaluation results to specific production traces requires manual work.

Teams shipping LLM applications with AI agents need platforms that integrate simulation testing, automated optimization, evaluation, and observability into a single system.

Top 5 Arize alternatives (2026)

1. LangWatch: End-to-end LLM observability and agent testing platform

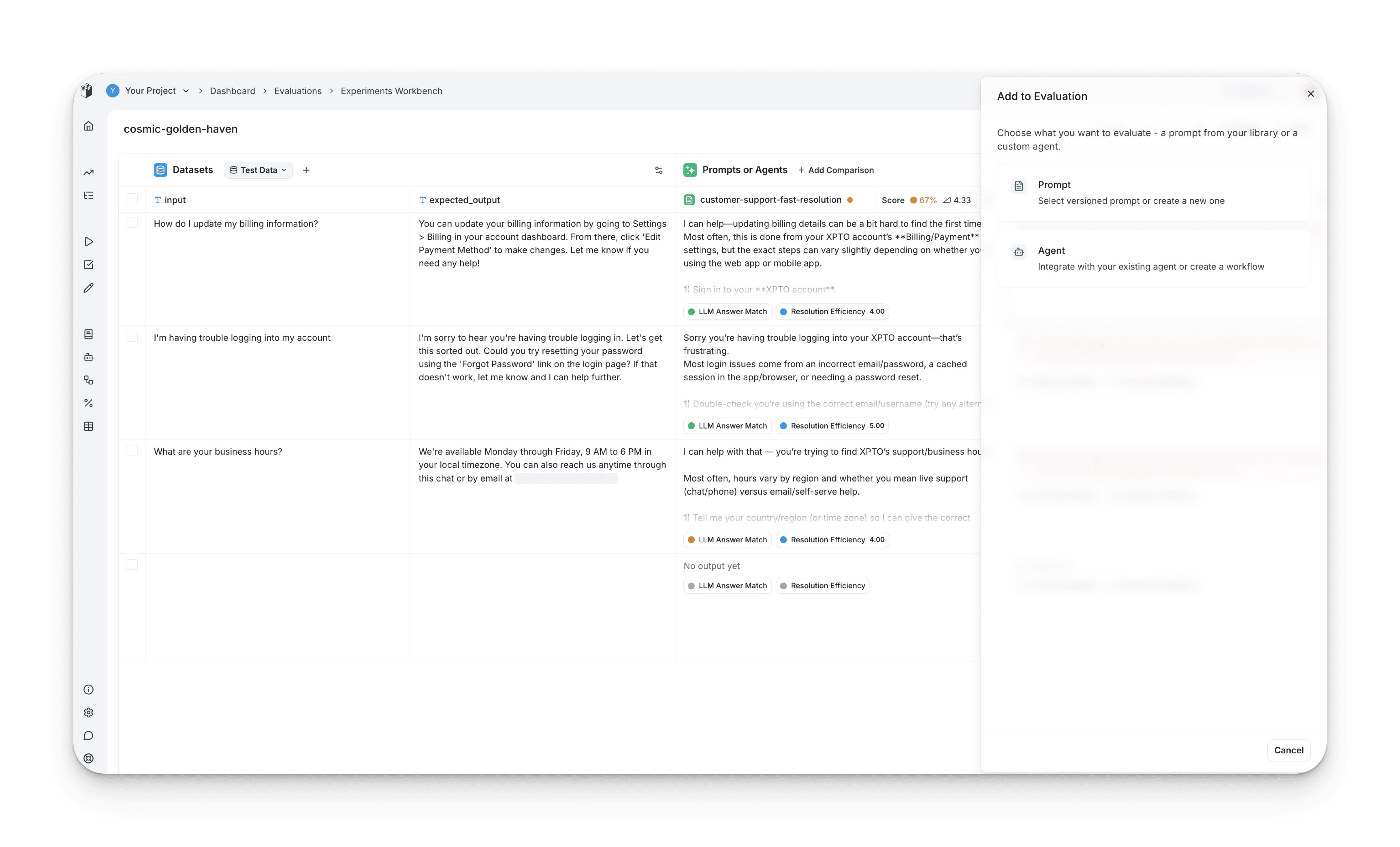

LangWatch takes an evaluation-first approach combined with unique agent simulation capabilities. Teams can test AI agents with thousands of synthetic conversations across scenarios, languages, and edge cases before production deployment, catching issues that traditional evaluation misses.

LangWatch consolidates agent testing, experimentation, evaluation, optimization, and observability into a single system, so teams use one platform instead of juggling multiple tools. Simulations, traces, evaluations, and optimization experiments stay in one place, where engineers, domain experts, and product managers work side by side.

Pros

Agent simulation testing

Simulate thousands of multi-turn conversations to test agent scenarios before production

Test complex agentic workflows including tool usage, reasoning chains, and multi-modal interactions

Validate edge cases and adversarial scenarios in controlled environments

Framework-agnostic simulation through AgentAdapter interface supporting any agent architecture
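To make the idea of multi-turn simulation concrete, here is a minimal sketch in plain Python. It does not use the LangWatch SDK or its AgentAdapter interface. The names `Agent`, `refund_agent`, `simulate`, and `SimulationResult` are hypothetical and exist only for this illustration, and a real simulation would typically drive the user side with an LLM rather than a fixed script.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical agent signature: receives the conversation so far, returns a reply.
Turn = Tuple[str, str]  # (role, message)
Agent = Callable[[List[Turn]], str]


@dataclass
class SimulationResult:
    transcript: List[Turn] = field(default_factory=list)
    passed: bool = False


def refund_agent(history: List[Turn]) -> str:
    """Toy rule-based agent standing in for a real LLM-backed agent."""
    last_user_message = history[-1][1].lower()
    has_digits = any(ch.isdigit() for ch in last_user_message)
    if "order" in last_user_message and has_digits:
        return "Thanks, I have issued the refund for that order."
    if "refund" in last_user_message:
        return "I can help with that. What is your order number?"
    return "Could you tell me more about the problem?"


def simulate(agent: Agent, user_turns: List[str], success_phrase: str) -> SimulationResult:
    """Play scripted user turns against the agent, then check the final reply."""
    result = SimulationResult()
    for user_message in user_turns:
        result.transcript.append(("user", user_message))
        reply = agent(result.transcript)
        result.transcript.append(("assistant", reply))
    final_reply = result.transcript[-1][1] if result.transcript else ""
    result.passed = success_phrase in final_reply
    return result


# One happy-path scenario and one edge case with an unintelligible user message.
happy_path = simulate(refund_agent, ["I want a refund", "It is order 1234"], "issued the refund")
edge_case = simulate(refund_agent, ["asdf ???"], "issued the refund")
```

Running many such scenarios before deployment is what separates simulation from after-the-fact tracing: the failing edge case above is found without a real user ever hitting it.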

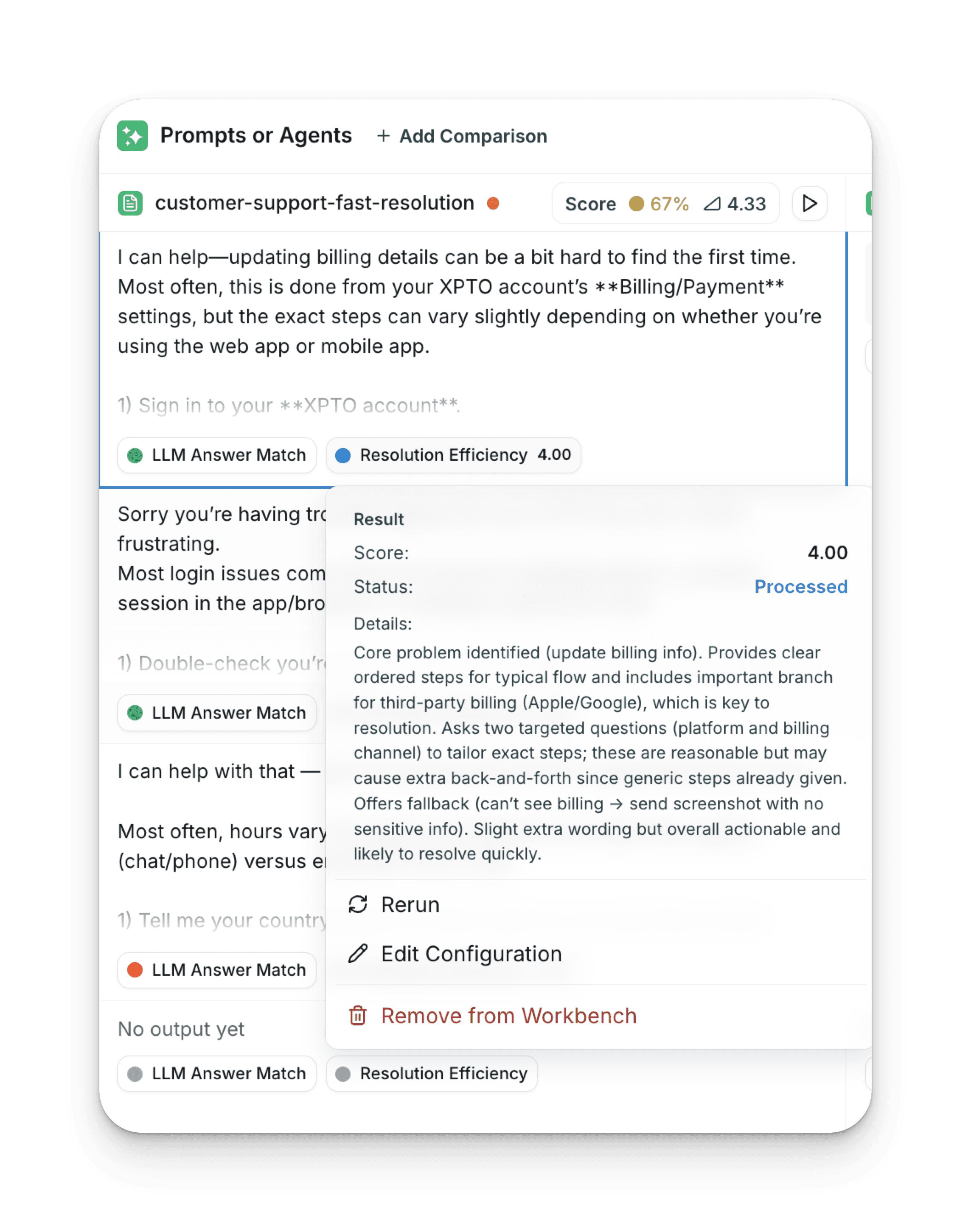

Systematic evaluation framework

Pre-built evaluators tested for accuracy including hallucination detection and safety checks

Custom LLM-as-judge evaluators generated automatically from a typed description

Rigorous regression detection: Failed experiments automatically become part of the regression suite that runs in CI/CD, giving teams measurable metrics on every change

CLHF (Continuous Learning with Human Feedback) auto-tunes evaluators with few-shot examples

Versioned datasets: Datasets built from production logs or curated test sets track performance over time for consistent evaluation across changes

Run evaluations in real-time on production traces or offline on datasets

Collaborate on the platform or run via your CI/CD
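The regression workflow described in this list (failed experiments become regression cases that CI re-runs) can be sketched with the standard library alone. This is an illustration of the pattern, not LangWatch's implementation. The JSON file layout, the `expected_substring` check, and the `model_v1` / `model_v2` stand-ins are all assumptions made for the example.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

Case = Dict[str, str]  # hypothetical shape: {"input": ..., "expected_substring": ...}


def run_cases(model: Callable[[str], str], cases: List[Case]) -> List[Case]:
    """Return the cases whose output lacks the expected substring."""
    return [c for c in cases if c["expected_substring"] not in model(c["input"])]


def add_failures_to_suite(suite_path: Path, failures: List[Case]) -> List[Case]:
    """Append new failures to the on-disk suite, skipping duplicates."""
    suite = json.loads(suite_path.read_text()) if suite_path.exists() else []
    for case in failures:
        if case not in suite:
            suite.append(case)
    suite_path.write_text(json.dumps(suite, indent=2))
    return suite


def model_v1(prompt: str) -> str:
    """Toy model that only knows one answer."""
    return "Paris is the capital of France." if "France" in prompt else "I am not sure."


def model_v2(prompt: str) -> str:
    """Toy model after a fix that adds a second answer."""
    if "Japan" in prompt:
        return "Tokyo is the capital of Japan."
    return model_v1(prompt)


experiment = [
    {"input": "Capital of France?", "expected_substring": "Paris"},
    {"input": "Capital of Japan?", "expected_substring": "Tokyo"},
]

with tempfile.TemporaryDirectory() as tmp:
    suite_path = Path(tmp) / "regression_suite.json"
    failures_v1 = run_cases(model_v1, experiment)
    suite = add_failures_to_suite(suite_path, failures_v1)
    # In CI, a non-empty list of remaining failures would fail the build.
    remaining_v1 = run_cases(model_v1, suite)
    remaining_v2 = run_cases(model_v2, suite)
```

The design point is that the suite only grows from observed failures, so every case in it corresponds to a problem the team has already seen once.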

OpenTelemetry-native tracing

Built on OpenTelemetry standard for framework-agnostic integration

Automatic instrumentation for LangGraph, DSPy, LangChain, Pydantic AI, and more

Support for 800+ models and providers including OpenAI, Anthropic, Bedrock, Gemini

Zero vendor lock-in with standardized tracing

Collaborative UI & CI/CD runs

Dual interface: platform UI for domain experts, programmatic APIs for developers

Data review and labeling with collaborative annotation workflows

Convert production traces into reusable test cases and golden datasets

Custom dashboards for business stakeholders with user behavior analytics
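As a rough illustration of turning production traces into a golden dataset, the sketch below filters reviewed traces and deduplicates them by input. The trace fields (`trace_id`, `input`, `output`, `review_score`) and the `traces_to_dataset` helper are hypothetical; they are not a LangWatch schema or API.

```python
from typing import Any, Dict, List


def traces_to_dataset(traces: List[Dict[str, Any]], min_score: float = 0.8) -> List[Dict[str, str]]:
    """Keep one row per unique input among traces reviewed at or above min_score."""
    rows: List[Dict[str, str]] = []
    seen_inputs = set()
    for trace in traces:
        score = trace.get("review_score")
        if score is None or score < min_score:
            continue  # skip unreviewed or low-quality traces
        key = trace["input"].strip().lower()
        if key in seen_inputs:
            continue  # skip near-duplicate inputs
        seen_inputs.add(key)
        rows.append(
            {
                "input": trace["input"],
                "expected_output": trace["output"],
                "source_trace_id": trace["trace_id"],
            }
        )
    return rows


production_traces = [
    {"trace_id": "t1", "input": "How do I reset my password?", "output": "Use the reset link.", "review_score": 0.9},
    {"trace_id": "t2", "input": "how do i reset my password? ", "output": "Click reset.", "review_score": 1.0},
    {"trace_id": "t3", "input": "Cancel my plan", "output": "I cannot help.", "review_score": 0.4},
    {"trace_id": "t4", "input": "Where is my invoice?", "output": "Check billing.", "review_score": None},
    {"trace_id": "t5", "input": "Where is my invoice?", "output": "See Billing > Invoices.", "review_score": 0.95},
]

golden_dataset = traces_to_dataset(production_traces)
```

Keeping `source_trace_id` on each row preserves the link back to the originating trace, which is what makes evaluation results traceable to production behavior.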

Deployment flexibility

Self-hosting with unlimited features

Cloud-hosted option with generous free tier

Hybrid deployment keeping sensitive data on-premises

Enterprise support with air-gapped installations available

Cons

Your team needs to be ready to set quality standards: decide what the goal is and understand what quality looks like for your company or product.

Best for

Companies building production AI agents and complex agentic systems that need comprehensive testing, automated optimization, and cross-functional collaboration. It is a particularly good fit for larger teams and organisations.

Pricing

Free tier for developers exploring evaluation workflows. Growth plan starts at €29/user/month with unlimited experiments and simulations. Enterprise plans offer custom deployments, including air-gapped installations.

Why teams choose LangWatch over Arize

| Feature | LangWatch | Arize | Winner |
|---|---|---|---|
| Agent simulation testing | ✅ Synthetic conversation testing with thousands of scenarios | ❌ No simulation capabilities | LangWatch |
| Automated prompt optimization | ✅ DSPy integration with MIPROv2 and systematic optimization | ❌ Manual prompt engineering only | LangWatch |
| LLM evaluation framework | ✅ Pre-built evaluators plus auto-generated custom ones, in both the UI and CI/CD | ✅ Has evaluation capabilities | LangWatch |
| OpenTelemetry integration | ✅ Built on OpenTelemetry standard, framework-agnostic | ❌ Proprietary instrumentation | LangWatch |
| Collaborative workflows | ✅ Dual interface for domain experts and developers | ❌ Developer-focused interface | LangWatch |
| Trace visualization | ✅ Built for nested agent calls and complex workflows | ✅ Built on ML monitoring foundation | Tie |
| Dataset management | ✅ Automatically created from traces, integrated workflow | ❌ Available but separate from tracing | LangWatch |
| Deployment model | ✅ Cloud, self-hosted (free), and hybrid options | ✅ Cloud and on-prem | LangWatch |

LangWatch's agent simulation testing and automated optimization catch issues before production while enabling cross-functional teams to collaborate effectively. Start with LangWatch's free tier →

2. Langfuse: Open-source LLM observability platform

Langfuse offers an open-source observability platform with trace logging, prompt management, and basic analytics. Its self-hosted, open-source option appeals to teams with strict data policies.

Pros

Fully open-source with self-hosted deployment

Manual annotation system for marking problematic outputs

Session grouping for multi-turn conversation debugging

Active community and abundant documentation

Cost tracking across providers

Cons

No built-in eval runners or scorers - you build everything yourself

No CI/CD integration for automated testing

No collaboration workflows for PMs or data scientists

No agent simulation capabilities

No automated prompt optimization

Basic trace viewing without experiment comparison or statistical analysis

Self-hosting requires dedicated DevOps resources

Pricing

Free for open-source self-hosting. Paid cloud plan starts at $29/month with custom enterprise pricing.

Best for

Teams requiring open-source self-hosted deployment with full data control who have resources to build custom evaluation and optimization pipelines from scratch.

Read more on Langfuse vs. LangWatch.

3. Fiddler AI: Enterprise ML and LLM monitoring platform

Fiddler AI extends traditional ML monitoring into LLM observability. The platform offers monitoring, explainability, and safety features for both classical ML models and generative AI applications.

Pros

Unified ML and LLM monitoring in one platform

Drift detection for embeddings and model outputs

Hallucination detection and safety guardrails

Root cause analysis for model performance issues

Alert configuration for quality thresholds

Cons

ML-first interface makes LLM-specific workflows less intuitive

No CI/CD deployment blocking

No agent simulation testing

No automated prompt optimization capabilities

No free tier for teams to test the platform

No built-in version control or A/B testing for prompts

Pricing

Custom enterprise pricing only.

Best for

Organizations already using Fiddler for traditional ML monitoring who want unified observability across both classical and generative AI models.

4. LangSmith: LangChain ecosystem observability

LangSmith is the observability platform built by the LangChain team. It traces LangChain applications automatically and provides evaluation tools designed specifically for LangChain workflows.

Pros

Zero-config tracing for LangChain and LangGraph applications

Automatic instrumentation with a single environment variable

Dataset versioning and test suite management

Experiment comparison interface

LLM-as-judge evaluation for correctness and relevance

Cons

Works best within the LangChain ecosystem, less flexible for other frameworks

No agent simulation testing capabilities

No automated prompt optimization framework

Costs scale linearly with request volume

Self-hosted deployment only on Enterprise plans

Deep LangChain integration creates switching costs

Pricing

Free tier with 5K traces monthly for one user. Paid plan at $39/user/month with custom enterprise pricing including self-hosting.

Best for

Teams running their entire LLM stack on LangChain or LangGraph who prioritize zero-config framework integration over flexibility and agent testing capabilities.

Arize alternative feature comparison

| Feature | LangWatch | Langfuse | Fiddler AI | LangSmith |
|---|---|---|---|---|
| Distributed tracing | ✅ | ✅ | ✅ | ✅ |
| Agent simulation testing | ✅ Thousands of scenarios | ❌ | ❌ | ❌ |
| Automated prompt optimization | ✅ DSPy integration | ❌ | ❌ | ❌ |
| Evaluation framework | ✅ 20+ built-in evaluators | ✅ Build yourself | ✅ | ✅ |
| CI/CD integration | ✅ | ✅ Guides | ❌ | Partial |
| OpenTelemetry native | ✅ | ❌ | ❌ | ❌ |
| Collaborative workflows | ✅ Dual interface | ❌ | ❌ | ❌ |
| Prompt versioning | ✅ | ✅ | ❌ | ✅ |
| Dataset management | ✅ Auto from traces | ✅ | ✅ | ✅ |
| Self-hosting | ✅ Free unlimited | ✅ Free unlimited | ✅ | ✅ Enterprise only |
| Multi-provider support | ✅ 800+ models | ✅ | ✅ | ✅ |
| Experiment comparison | ✅ | ✅ | ✅ | ✅ |
| Custom evaluators | ✅ Auto-generated | ✅ | ✅ | ✅ |
| Cost tracking | ✅ | ✅ | ✅ | ✅ |
| Free tier | Generous + unlimited self-hosting | 50K units | None | 5K traces |

Choosing the right Arize alternative

Choose LangWatch if: You need agent simulation testing for multi-turn conversations, automated prompt optimization with DSPy, OpenTelemetry-native integration, or cross-functional collaboration between developers and domain experts.

Choose Langfuse if: Open-source self-hosting is mandatory and you have resources to build custom agent testing and optimization pipelines from scratch.

Choose Fiddler AI if: You already use Fiddler for ML monitoring and need unified observability across traditional and generative AI models.

Choose LangSmith if: Your entire stack runs on LangChain/LangGraph and deep framework integration outweighs the need for agent simulation and optimization.

Final recommendation

LangWatch covers the entire LLM development lifecycle for agentic AI, including agent simulation testing, comprehensive evaluation, and production observability. The platform uniquely enables domain experts and developers to collaborate through dual interfaces while maintaining OpenTelemetry compatibility for vendor independence.

Companies building AI agents use LangWatch to test thousands of scenarios before production, optimize prompts automatically, and maintain quality through collaborative evaluation workflows. The open-source offering provides unlimited self-hosting at no cost.

Get started free or explore the documentation to see how LangWatch handles agent testing, optimization, and observability for production LLM applications.

Frequently asked questions

What is the best alternative to Arize for LLM observability?

LangWatch offers the most complete Arize alternative with agent simulation testing, collaborative evaluations, and OpenTelemetry-native tracing. Unlike Arize's ML-first architecture where LLM features were added later, LangWatch was built specifically for agentic AI workflows from day one. The platform uniquely combines simulation testing with automated optimization and comprehensive observability in one system.

Does Arize have a free tier for LLM tracing?

Arize Phoenix offers a free tier with 25K trace spans per month for one user. However, LangWatch provides a more generous free tier plus unlimited free self-hosting with no feature restrictions. LangWatch's free tier also includes agent simulation capabilities and DSPy optimization that Arize doesn't offer even in paid plans.

Do I need separate tools for LLM tracing and agent testing?

Not with modern platforms. LangWatch consolidates agent simulation testing, tracing, evaluation, prompt optimization, and dataset management in one system. Agent simulations automatically link to evaluation results and production traces, creating a complete feedback loop without tool switching.

Which LLM observability platform works with multiple AI providers?

LangWatch, Braintrust, Langfuse, LangSmith, and Fiddler AI all support multiple providers including OpenAI, Anthropic, Bedrock, and Gemini. However, LangWatch's OpenTelemetry-native architecture provides the most flexibility with support for 800+ models across any provider without vendor lock-in. Helicone also works with multiple providers, but it does so by proxying LLM calls through its platform.

How does LangWatch compare to Arize for agent testing?

LangWatch offers comprehensive agent simulation testing, running thousands of synthetic conversations that exercise scenarios, tool usage, and multi-modal flows before production. Arize has no agent simulation capabilities and focuses only on observing production behavior after deployment. For teams building AI agents, LangWatch's simulation testing catches issues that traditional observability misses.

What is DSPy optimization and why does it matter?

DSPy is a framework for programming and automatically optimizing LLM prompts. It combines modules such as ChainOfThought with optimizers such as MIPROv2, which search over instructions and few-shot examples. Instead of manual prompt engineering, DSPy tests many prompt variations and keeps the combination that scores best on your metrics. LangWatch integrates DSPy directly into the platform, enabling teams to improve prompt performance in minutes rather than weeks. Arize has no automated optimization capabilities.
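The core idea of metric-driven prompt search can be shown with a toy example. The sketch below is not DSPy and does not call any DSPy or LangWatch API. It uses an exhaustive search over a handful of hand-written candidates, and `toy_model`, `exact_match`, and `search_prompts` are hypothetical stand-ins, with `toy_model` being a deterministic function that only pretends to be an LLM.

```python
from itertools import product
from typing import List, Tuple

INSTRUCTIONS = [
    "Solve the problem.",
    "Solve the problem. Answer with the number only.",
]
DEMOS = ["", "Example: 5 - 2 -> 3"]


def toy_model(prompt: str, question: str) -> str:
    """Deterministic stand-in for an LLM whose behavior depends on the prompt."""
    a, op, b = question.split()
    # Without a worked example, the toy model mistakes every operator for "+".
    knows_subtraction = "Example:" in prompt
    value = int(a) - int(b) if (op == "-" and knows_subtraction) else int(a) + int(b)
    if "number only" in prompt:
        return str(value)
    return f"The answer is {value}."


def exact_match(prediction: str, gold: str) -> float:
    """Metric: 1.0 when the prediction equals the gold answer exactly."""
    return float(prediction.strip() == gold)


def search_prompts(devset: List[Tuple[str, str]]) -> Tuple[str, float]:
    """Score every instruction/demo combination and return the best one."""
    best_prompt, best_score = "", -1.0
    for instruction, demo in product(INSTRUCTIONS, DEMOS):
        prompt = f"{instruction}\n{demo}".strip()
        scores = [exact_match(toy_model(prompt, q), gold) for q, gold in devset]
        score = sum(scores) / len(scores)
        if score > best_score:
            best_prompt, best_score = prompt, score
    return best_prompt, best_score


devset = [("1 + 2", "3"), ("7 - 4", "3"), ("10 - 3", "7")]
best_prompt, best_score = search_prompts(devset)
```

Real optimizers replace the exhaustive loop with smarter proposal and selection strategies, but the contract is the same: a set of candidate prompts, a development set, and a metric that decides which candidate wins.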

Can I self-host LangWatch for free?

Yes. LangWatch's open-source version can be self-hosted completely free with no feature restrictions using Docker Compose or Kubernetes. This includes all agent simulation, DSPy optimization, evaluation, and observability features. Arize requires an enterprise plan for self-hosting, and other platforms like Braintrust and LangSmith also restrict self-hosting to paid enterprise tiers.

Which platform is best for cross-functional collaboration?

LangWatch was designed for cross-functional teams with a dual interface approach. Domain experts from Legal, Sales, or HR can create evaluation scenarios and review results through the platform UI without coding. Developers can build complex workflows via programmatic APIs and SDKs. This enables true collaboration between technical and non-technical stakeholders on AI quality. Other platforms primarily target developers only.