How to test AI Agents with LangWatch & Mastra / Google ADK and ship them reliably

Sergio Cardenas

Jan 29, 2026

Building AI agents is no longer the hard part. Shipping them to production with confidence is.

After working as a CTO at a startup and later building AI agents for larger organizations, I repeatedly ran into the same issue: agents worked well in demos, notebooks, and early testing, but became unreliable in real-world usage. Manual testing felt reassuring at first, but quickly broke down as agents became more complex, multi-turn, and tool-driven.

This article distills the lessons learned from that experience. It focuses on how to test AI agents, how to evaluate agent behavior end-to-end, and how to create a repeatable process for shipping agents to production.

Rather than comparing agent frameworks, this post presents a framework-agnostic approach. The examples use both TypeScript and Python, but the principles apply regardless of language, framework, or model provider.

TL;DR

All code used in this article is available in the repository.

This article explains:

How to structure AI agent projects so they are production-ready

How to test AI agents end-to-end without relying on manual testing

How scenario-based testing differs from prompt-only evaluations

How to detect regressions in multi-turn agent behavior

How to ship AI agents with confidence across frameworks and languages

We’ll build two agents showing different perspectives:

One in TypeScript

One in Python

There are many excellent frameworks for building AI agents today. If you know what you need, there’s probably a framework that makes implementation easier.

This post is not a comparison between frameworks.

Instead, it focuses on:

The process of building an AI agent

How to structure projects from day one

How to test agents end-to-end

How to move beyond manual “it seems to work” validation

What’s the tech stack?

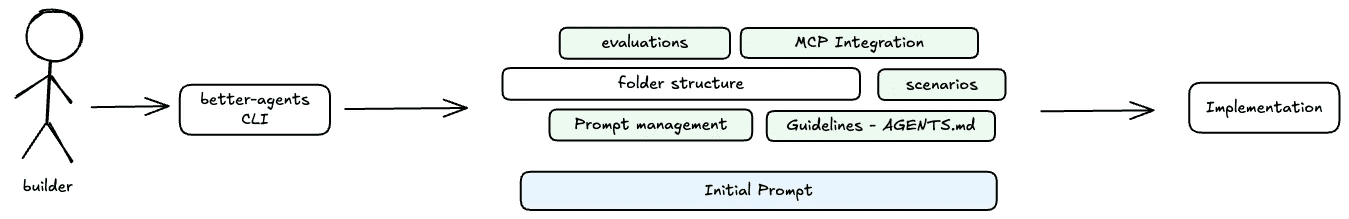

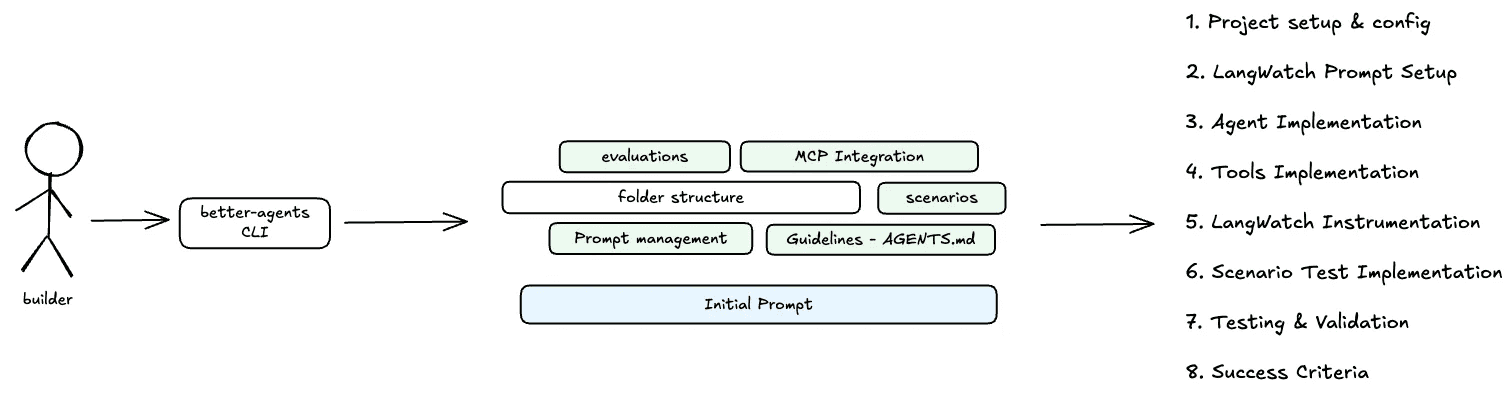

To facilitate the creation process, I'll use the CLI tool Better Agents from LangWatch.

Better Agents is a CLI tool created by the LangWatch team. It gives you a set of standards and good practices for agent building right from the start, including:

Scenario-agent tests to ensure agent behavior

Prompt versioning

Evaluation notebooks to measure prompt performance

Full observability instrumentation

Standardized structure for maintainability

Additionally, for the testing process, I'm going to use Scenarios, the same tool from my previous post, also created by LangWatch.

Scenarios is a framework-agnostic tool for testing agents that allows you to:

Simulate real users in different scenarios and edge cases

Evaluate at any point in the conversation with multi-turn control

Integrate with any evaluation framework or custom evals

Simple integration by implementing only a `call()` method

Available in Python, TypeScript, and Go
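To make the "only a `call()` method" point concrete, here is a minimal Python sketch of the adapter idea. It is not the Scenarios API: the `Message` type, the `AgentAdapter` protocol, and the rule-based `FruitPriceAgentAdapter` are hypothetical stand-ins. In a real project, `call()` would invoke the Mastra or Google ADK agent instead of reading from a lookup table.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str


class AgentAdapter(Protocol):
    """Hypothetical adapter interface: one call() that maps the
    conversation so far to the agent's next reply."""

    def call(self, messages: list[Message]) -> str: ...


@dataclass
class FruitPriceAgentAdapter:
    """Hypothetical rule-based stub; a real adapter would invoke the
    Mastra or Google ADK agent inside call()."""

    prices: dict[str, float] = field(
        default_factory=lambda: {"avocado": 2.10, "mango": 1.75}
    )

    def call(self, messages: list[Message]) -> str:
        # Answer based on the most recent user message.
        last_user = next(m.content for m in reversed(messages) if m.role == "user")
        for fruit, price in self.prices.items():
            if fruit in last_user.lower():
                return f"The current price of {fruit} is ${price:.2f}/kg."
        return "Which fruit would you like a price for?"


def run_turn(agent: AgentAdapter, history: list[Message], user_text: str) -> str:
    """Appends the user message, asks the agent for a reply, and records it."""
    history.append(Message("user", user_text))
    reply = agent.call(history)
    history.append(Message("assistant", reply))
    return reply


history: list[Message] = []
print(run_turn(FruitPriceAgentAdapter(), history, "What is the price of avocado?"))
# prints "The current price of avocado is $2.10/kg."
```

The test harness only depends on `call()`, so swapping the stub for a real agent does not change any test code.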

Additional note

Feel free to try the other available frameworks (Vercel AI SDK, Agno, Google ADK, and LangGraph) as well as the variety of LLM providers, such as OpenAI, Anthropic, Gemini, Bedrock, OpenRouter, and Grok.

Without further ado, let's move on.

Scope

As usual, I'm going to use the supply chain in the fresh produce industry as the base use case. We'll define a few lightweight requirements that establish the foundation on which the agent will be built.

Functional requirements

Users can ask natural-language questions about current prices by fruit.

Users can compare prices across varieties and markets.

Non-functional requirements

Latency targets: Search and comparison queries return results in under 2s.

Availability: Prioritize high availability for read queries.

Scalability: System should automatically scale without user-visible degradation.

Durability: System should have no data loss for committed records; periodic backups with verified restore procedures.

Security and access control: Protect API keys and source credentials; enforce access authorization; encrypt data in transit (TLS) and at rest; minimize PII collection.
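The 2s latency target can be turned into an automated check. The Python sketch below assumes a nearest-rank 95th percentile as the pass criterion (the requirement itself does not say which percentile), and `agent_call` is a hypothetical function that sends one query to the agent and returns its reply.

```python
import math
import time
from typing import Callable

LATENCY_BUDGET_S = 2.0  # from the "under 2s" non-functional requirement


def measure_latencies(agent_call: Callable[[str], str], queries: list[str]) -> list[float]:
    """Sends each query once and returns wall-clock latencies in seconds."""
    latencies = []
    for query in queries:
        start = time.perf_counter()
        agent_call(query)
        latencies.append(time.perf_counter() - start)
    return latencies


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile of the measurements."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def meets_latency_budget(latencies: list[float], budget_s: float = LATENCY_BUDGET_S) -> bool:
    """True when the 95th-percentile latency is under the budget."""
    return p95(latencies) < budget_s
```

In a test suite, you would call `meets_latency_budget(measure_latencies(agent_call, queries))` with a representative set of search and comparison queries. Using a percentile rather than the mean keeps a few slow outliers from hiding behind many fast responses.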

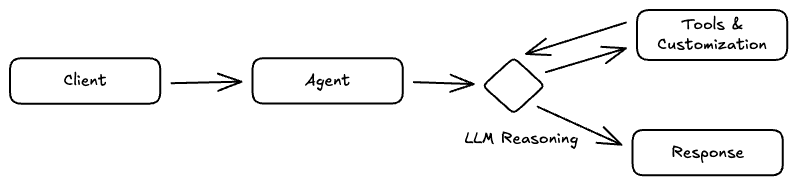

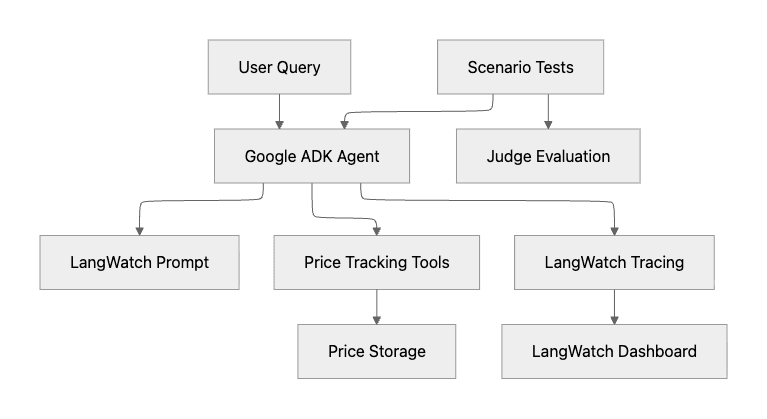

The following high-level diagram shows how the agent should operate with extended capabilities.

Since we'll focus on implementation and testing, it's important to state up front what this post does NOT cover.

Below the line

Data ingestion and normalization, where the system should periodically fetch price data from multiple sources, deduplicate, and standardize units and currencies.

The tools available to the agent will not be implemented; they will be mocked instead.
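As an illustration of what such mocks could look like for the two functional requirements (current price by fruit, comparison across varieties and markets), here is a minimal Python sketch. The tool names, the `PriceQuote` fields, and all prices are hypothetical fixture data, not real market figures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PriceQuote:
    fruit: str
    variety: str
    market: str
    price_per_kg: float
    currency: str = "USD"


# Hypothetical fixture data for tests; not real market prices.
MOCK_PRICES = [
    PriceQuote("avocado", "Hass", "Los Angeles", 2.10),
    PriceQuote("avocado", "Fuerte", "Los Angeles", 1.85),
    PriceQuote("avocado", "Hass", "New York", 2.40),
    PriceQuote("mango", "Ataulfo", "New York", 1.75),
]


def get_current_price(fruit: str, market: Optional[str] = None) -> list[PriceQuote]:
    """Mocked tool: current quotes for a fruit, optionally filtered by market."""
    name = fruit.strip().lower()
    return [
        q for q in MOCK_PRICES
        if q.fruit == name and (market is None or q.market == market)
    ]


def compare_prices(fruit: str) -> dict[str, float]:
    """Mocked tool: maps "variety @ market" to price so the agent can compare them."""
    return {f"{q.variety} @ {q.market}": q.price_per_kg for q in get_current_price(fruit)}
```

Because the mocks return deterministic data, a failing test points at the agent's behavior rather than at a changing data source.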

Let's move on.

What about the frameworks?

We already know the requirements, but which language are we going to program in?

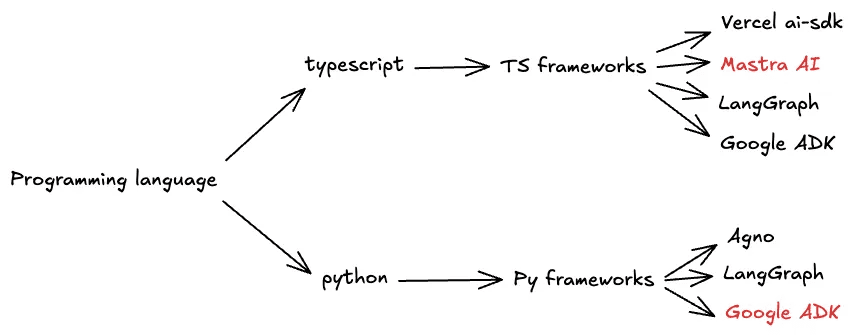

Both Python and TypeScript provide a robust ecosystem, integrate well with pre-existing services, and are highly compatible with cloud providers.

The chosen framework should help us meet the non-functional requirements, or at least give us the minimum capabilities needed to achieve them.

For TypeScript, I'll use Mastra AI, and for Python, I'll use Google ADK, frameworks I've been using more regularly lately.

The following diagram shows the flow for choosing a framework.

Keep this flow in mind; as we'll see, Better Agents automates this process for us.

Implementation

Before we begin: I'll use Amazon Bedrock for the Mastra project and Gemini for the Google ADK project, so it's handy to have the API keys ready.

That said, let's start with the setup of our Better Agents projects.

06-aws-bedrock-mastra

07-gemini-adk

Setting up a Better Agents Project

Let's start by creating an agent.

The CLI guides us through selecting the programming language, agent framework, coding assistant, LLM provider, and API keys.

When asked "What is your preferred coding assistant for building the agent?", I chose None. This gives me more control over the project creation and lets me adjust the planning if necessary.

We can repeat this process for the Google ADK project.

What’s the output?

Reviewing both projects, we can see that each one comes with the foundation and guidelines needed to follow good practices for agent creation.

This is the file structure.

We also get the initial prompt that we'll use in our coding assistant to generate the project.

The following diagram shows the result of the project initialization.

Let's move on.

Planning in Cursor

Before starting to build the agent, we'll use the initial prompt we've been given to plan in Cursor.

For both projects, we get a plan of how the agent will be structured.

Additionally, we can see a diagram of how Cursor views the agent's structure.

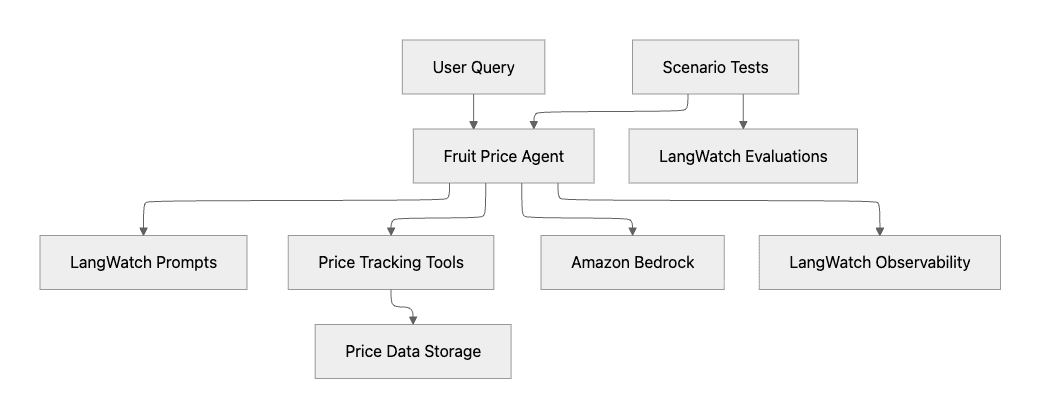

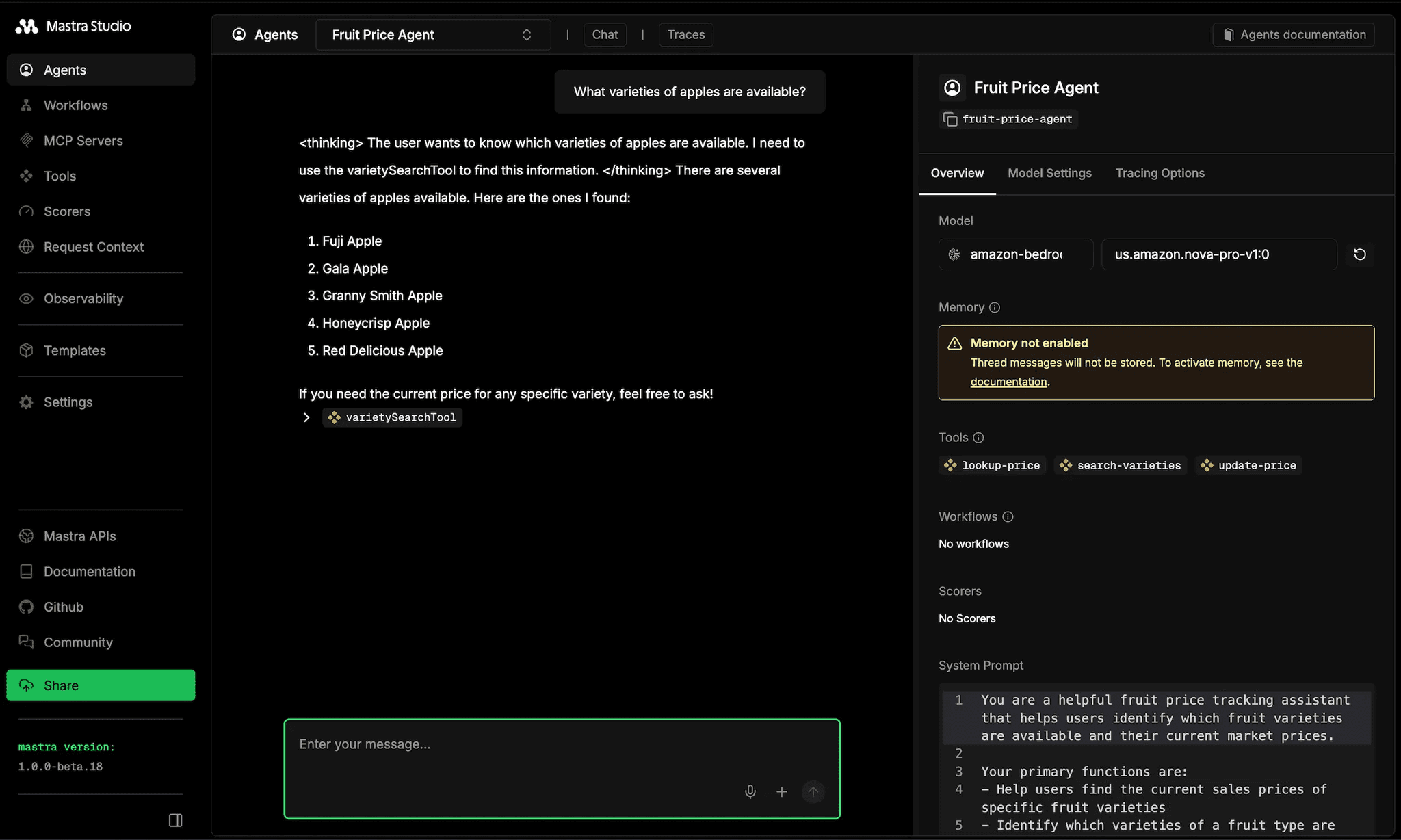

The following diagram shows the agent structure for the TypeScript project with Mastra and Amazon Bedrock.

And the respective version in Python with Google ADK and Google Gemini.

Observations

In both cases, the use of Prompt management, Tools, Observability, and Scenarios testing is planned. That's great.

In the Google ADK project, Gemini is assumed to be the LLM provider, and it isn't shown in the diagram.

Let's add some spicy sauce

To add a bit of variety to both projects, I'm going to make the following modifications in both the mcp.json and AGENTS.md.

In the TypeScript project, I'll specify the use of the beta version of Mastra AI and the Amazon Nova Pro model from Amazon Bedrock.

In the Python project, I'll specify the use of the Gemini 2.5 Flash model from Google.

With these changes, Cursor will give us an updated implementation plan. In summary, we can update our previous diagram with the provided information.

Let's get building!

Building

Once we start building, we can interact with the assistant to refine any details that come up.

The process is quite straightforward; however, we may need to intervene to make corrections. In those cases, my recommendations are:

Read the documentation to understand how each component is implemented.

Emphasize the use of the included MCP servers.

What does the agent look like?

Here are some interesting things.

We can see that it has implemented prompt management, which is nice, and the agent itself is already in place.
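At its core, prompt management means treating prompts as versioned artifacts that the agent pins explicitly. The in-memory `PromptRegistry` below is a hypothetical Python sketch of that idea; it is not the format Better Agents or LangWatch use to store prompts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: int
    template: str

    def render(self, **variables: str) -> str:
        return self.template.format(**variables)


class PromptRegistry:
    """Hypothetical in-memory registry: every publish creates a new immutable version."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}

    def publish(self, name: str, template: str) -> PromptVersion:
        versions = self._versions.setdefault(name, [])
        prompt = PromptVersion(name, len(versions) + 1, template)
        versions.append(prompt)
        return prompt

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Returns a pinned version, or the latest one when version is None."""
        versions = self._versions[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        return versions[version - 1]


registry = PromptRegistry()
registry.publish("fruit-price-agent", "You answer questions about {domain} prices.")
registry.publish("fruit-price-agent", "You answer questions about {domain} prices. Always name the market.")
print(registry.get("fruit-price-agent", version=1).render(domain="fresh produce"))
# prints "You answer questions about fresh produce prices."
```

Pinning a version makes every prompt change a deliberate, testable change: the tests can run against the new version before the agent switches to it.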

Alrighty, we can verify the same in Python.

Let's run a quick test in Mastra Studio to check that it works. You'll probably need to tweak a few things here and there, but I don't expect major changes.

Here's the thing: it is quite normal to test an agent manually, with humans doing both the testing and the evaluation. We are the ones who decide whether the agent behaves correctly, and that does not scale.

That’s where Scenarios comes in.

Testing

In my previous post, I showed how I discovered that I could test a newly created agent end-to-end.

Definition: Scenario-Based Testing for AI Agents

Scenario-based testing simulates real user interactions with an AI agent and evaluates behavior, decisions, and outcomes across multiple conversation turns, rather than testing isolated prompts.
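To illustrate the definition, here is a minimal, framework-free Python sketch of a scripted multi-turn scenario: each turn sends a user message and checks the reply at that point in the conversation. The `Turn`, `run_scenario`, and `stub_agent` names are hypothetical. Scenarios can simulate the user with an LLM instead of a fixed script and evaluate criteria with an LLM judge instead of these lambdas.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

History = List[Tuple[str, str]]  # (user message, agent reply) pairs
FRUITS = ("avocado", "mango")


@dataclass
class Turn:
    user: str                     # scripted user message for this turn
    check: Callable[[str], bool]  # expectation on the agent's reply at this point
    description: str


@dataclass
class ScenarioResult:
    passed: bool
    failures: List[str] = field(default_factory=list)
    transcript: History = field(default_factory=list)


def run_scenario(agent: Callable[[History, str], str], turns: List[Turn]) -> ScenarioResult:
    """Plays the turns in order, checking the reply after every turn."""
    transcript: History = []
    failures = []
    for i, turn in enumerate(turns, start=1):
        reply = agent(transcript, turn.user)
        transcript.append((turn.user, reply))
        if not turn.check(reply):
            failures.append(f"turn {i}: {turn.description}")
    return ScenarioResult(not failures, failures, transcript)


def find_fruit(text: str) -> Optional[str]:
    return next((f for f in FRUITS if f in text.lower()), None)


def stub_agent(history: History, user_msg: str) -> str:
    """Hypothetical agent that must carry the fruit over from earlier turns."""
    fruit = find_fruit(user_msg)
    if fruit is None:
        # Fall back to the most recent fruit mentioned earlier in the conversation.
        fruit = next((f for f in (find_fruit(u) for u, _ in reversed(history)) if f), None)
    if fruit is None:
        return "Which fruit are you interested in?"
    if "compare" in user_msg.lower():
        return f"{fruit}: Los Angeles 2.10 USD/kg vs New York 2.40 USD/kg."
    return f"{fruit}: 2.10 USD/kg in Los Angeles."


turns = [
    Turn("What's the avocado price today?",
         lambda r: "avocado" in r and "USD/kg" in r, "quotes an avocado price"),
    Turn("How does that compare with New York?",
         lambda r: "avocado" in r and "New York" in r, "keeps the avocado context across turns"),
]
result = run_scenario(stub_agent, turns)
print(result.passed, result.failures)  # prints "True []"
```

An agent that ignores the conversation history passes turn 1 but fails turn 2, which is exactly the kind of multi-turn regression that a single-prompt evaluation would miss.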

Scenario-Based Testing for AI Agents

Here's an interesting scenario test: I can easily define an LLM as a judge to verify the scenario I'm interested in.

In the same way, I can check whether my tools were called. Here is what the scenario looks like.

And its results.
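The two checks described above (judge criteria and tool calls) can be approximated without any framework. In this Python sketch, `ToolRecorder` wraps a tool and records its calls, and `judge` is a deterministic, keyword-based stand-in for the LLM judge. The agent, the `compare_prices` tool, and its prices are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolRecorder:
    """Wraps tool functions and records every call so tests can assert on them."""

    calls: list[tuple[str, dict[str, Any]]] = field(default_factory=list)

    def wrap(self, name: str, fn: Callable[..., Any]) -> Callable[..., Any]:
        def recorded(**kwargs: Any) -> Any:
            self.calls.append((name, kwargs))
            return fn(**kwargs)
        return recorded

    def was_called(self, name: str, **expected_args: Any) -> bool:
        return any(
            called == name and all(args.get(k) == v for k, v in expected_args.items())
            for called, args in self.calls
        )


def judge(reply: str, criteria: dict[str, Callable[[str], bool]]) -> dict[str, bool]:
    """Deterministic stand-in for an LLM judge: one verdict per named criterion."""
    return {name: check(reply) for name, check in criteria.items()}


# Hypothetical mocked tool and agent step, for illustration only.
recorder = ToolRecorder()
compare_prices = recorder.wrap(
    "compare_prices",
    lambda fruit: {"Hass @ Los Angeles": 2.10, "Hass @ New York": 2.40},
)


def agent(user_msg: str) -> str:
    # Hard-wired decision for the sketch: call the comparison tool, then answer.
    prices = compare_prices(fruit="avocado")
    cheapest = min(prices, key=prices.get)
    return f"The cheapest avocado is {cheapest} at {prices[cheapest]:.2f} USD/kg."


reply = agent("Where are avocados cheapest right now?")
verdict = judge(reply, {
    "names the cheapest market": lambda r: "Los Angeles" in r,
    "states a price per kg": lambda r: "USD/kg" in r,
})
assert recorder.was_called("compare_prices", fruit="avocado")
assert all(verdict.values()), verdict
```

Checking the tool call separately from the final answer catches agents that produce a plausible reply without actually consulting the data.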

What about extended functionality?

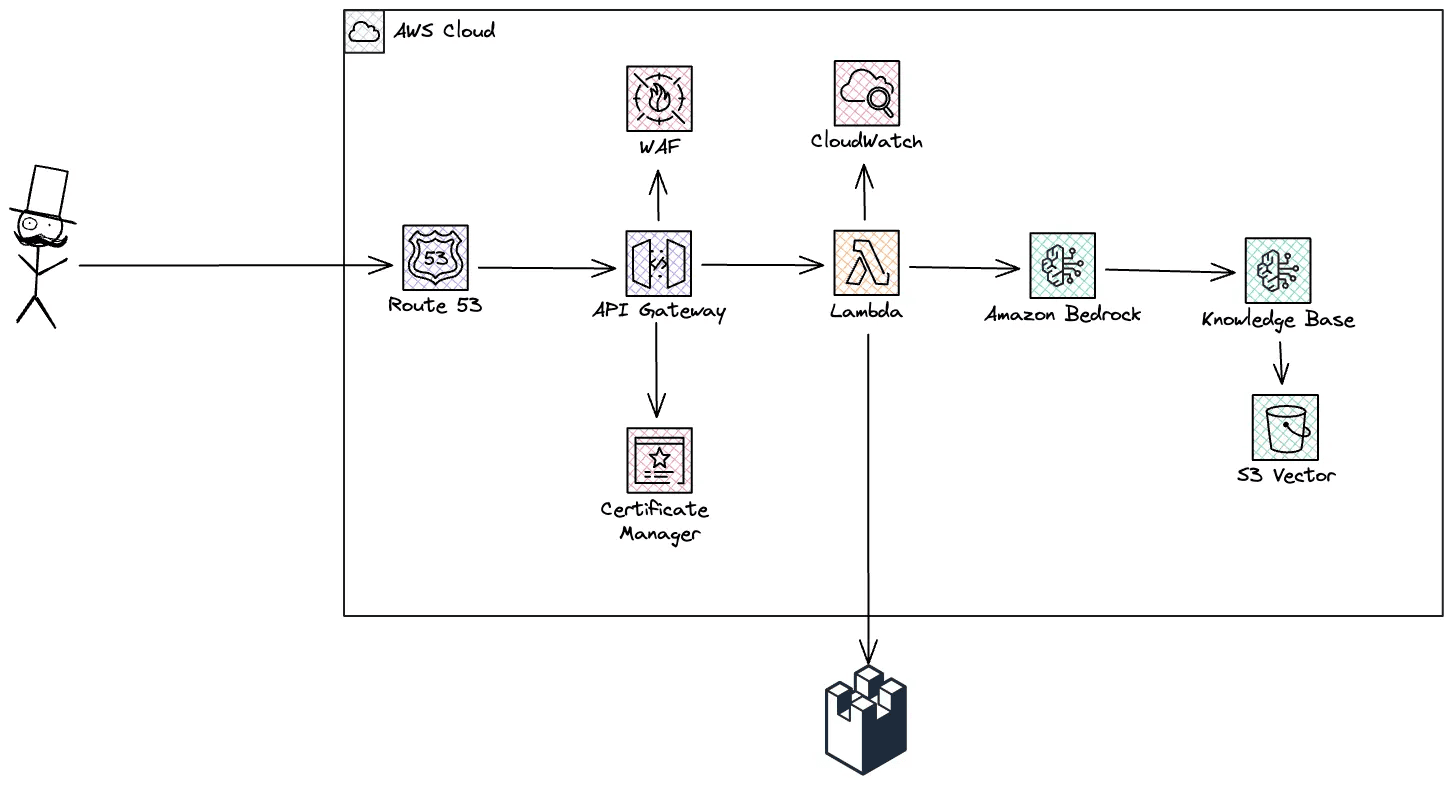

Since the post is already long, I'll just give a hint about the next implementation step.

We can easily extend what we just created and deploy it in our environment of choice. In this case, I'll do it in AWS.

LangWatch support

The CLI tool handles the repetitive setup, letting you focus on the actual agent logic rather than boilerplate configuration.

The real value comes from Scenarios. Testing agents manually doesn't scale, and having a framework-agnostic tool that simulates real user interactions makes a significant difference. You can define criteria, verify tool calls, and run multi-turn conversations programmatically.

Conclusion: A repeatable way to ship AI agents with confidence

The hardest part of building AI agents is not choosing a framework or model. It is knowing whether the agent will behave correctly once it reaches production.

Manual testing does not scale. Prompt-level evaluations are insufficient. AI agents must be tested end-to-end, across multiple turns, with explicit validation of behavior and tool usage.

Scenario-based testing provides a practical solution. By simulating real users, verifying intermediate decisions, and evaluating outcomes programmatically, teams can detect regressions early and ship agents with confidence.

Once requirements are clear and testing is in place, framework choice becomes a secondary concern. The combination of structured project setup and automated agent testing creates a predictable path from prototype to production.

AI Summary

AI agents require different testing strategies than traditional software

Manual testing and prompt-only evaluations do not scale

Scenario-based testing validates multi-turn behavior and tool usage

Framework choice matters less than testing and observability

Production-ready agents require automated, repeatable evaluations