Closing the Year Strong: December Product Updates

Manouk Draisma

Dec 24, 2025

As we wrap up the year, the LangWatch team wants to wish you happy holidays and a healthy, successful 2026.

Here’s to a year of agents that actually perform in production: reliably, safely, and with confidence.

Over the past few months, we’ve increasingly heard the same thing from teams across industries: LLMOps is becoming a core pillar of 2026 roadmaps. If that’s true for your organization as well, feel free to reach out. Our team is happy to support you with input, examples, and structure for building a strong internal business case.

To close out the year, we shipped a set of meaningful product improvements. Not incremental tweaks, but changes that make LangWatch easier to use, easier to scale, and more powerful for teams running real-world agent systems.

Below is a quick overview of what’s new.

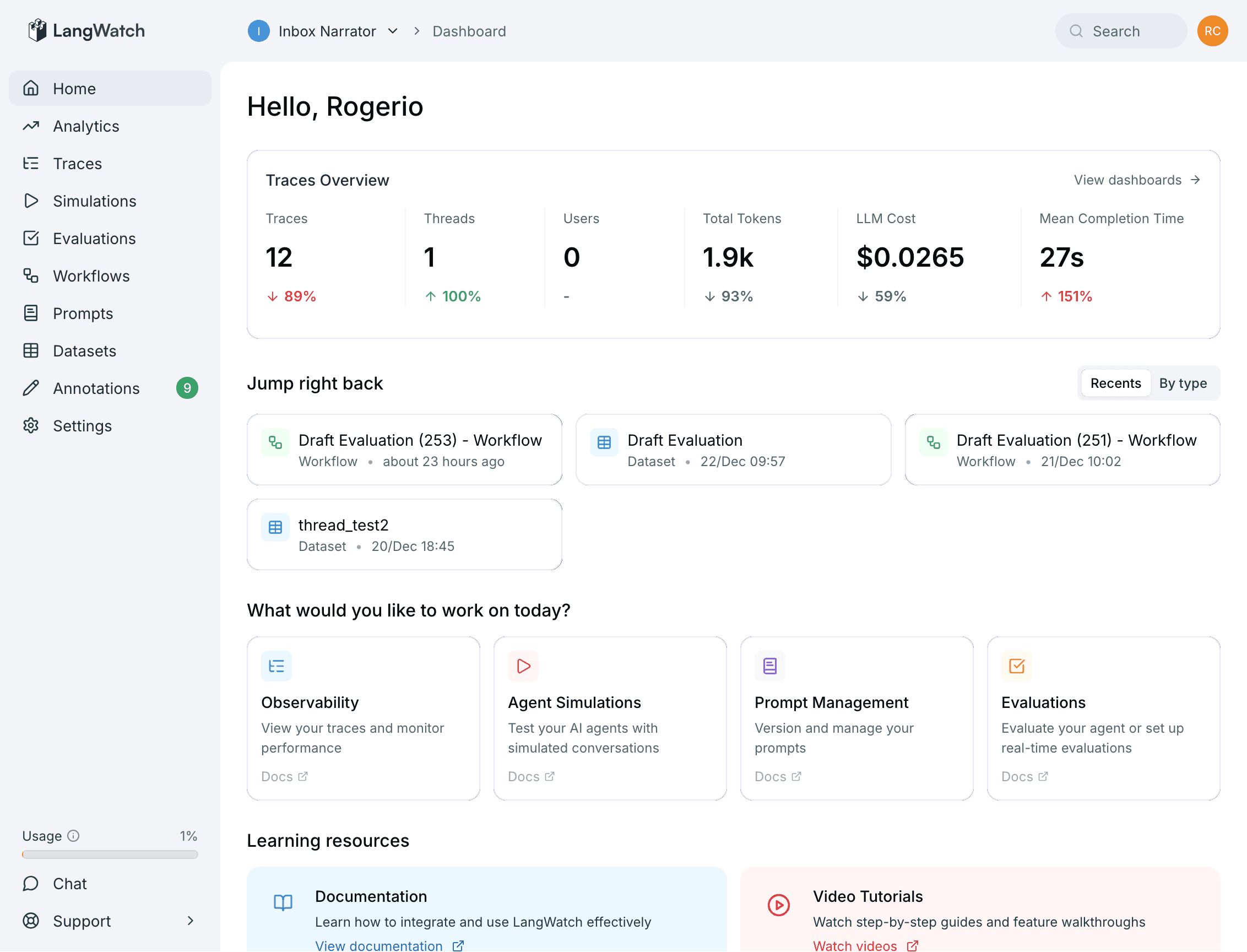

A redesigned dashboard and UI

We’re ending the year with a big one: LangWatch has a completely new dashboard and visual design.

This isn’t a light refresh or cosmetic polish. We rethought how the product should feel when you’re actually using it day to day, especially when you’re debugging, evaluating, or investigating issues in production.

Evaluations, traces, and signals are now front and center, with:

Clearer structure and hierarchy

Better information density without overwhelming the screen

Far less visual friction when analyzing results

The goal was simple: reduce the time between “something looks off” and “I understand exactly why.”

If you haven’t logged in recently, this is a great moment to take another look.

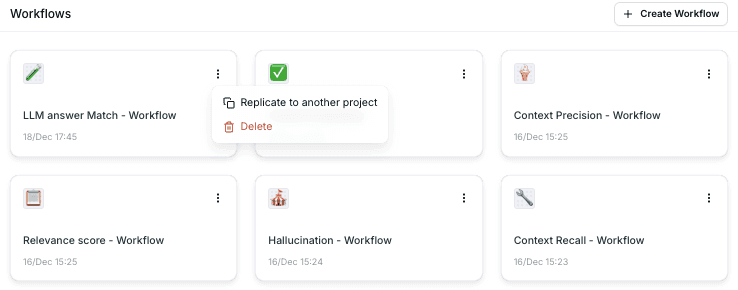

Replicate workflows, prompts, evaluations, and datasets across projects

If you’re running multiple LangWatch projects (for example, separate environments for development, staging, and production), this update was built specifically for you.

You can now replicate workflows, prompts, evaluations, and datasets across projects, giving you far more control over how you manage real-world setups:

Maintain full isolation and access control between dev, staging, and prod

Reuse the exact same evaluation logic across environments

Replicate only what you need, when you need it

Iterate and sync without duplicating effort or losing consistency

This makes LangWatch significantly easier to operate at scale, especially for teams running multiple agents or pipelines in parallel.

Analytics updates for more advanced monitoring

Analytics has also received a series of improvements aimed at more advanced organization and monitoring workflows.

You can now:

Create multiple dashboard pages per project. Separate analytics by use case, agent, evaluation, or environment.

Reorder and manage dashboard pages. Keep the most relevant insights easily accessible.

Group traces by labels within an evaluation. Enable consistent metric comparisons across categories or scenarios.

Attach alerts to analytics graphs. Trigger notifications when metrics cross thresholds or regress against a baseline.
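To make the alerting idea concrete, here is a minimal Python sketch of the two conditions mentioned above: a metric crossing a fixed threshold, and a metric regressing against a baseline. It is purely illustrative and is not LangWatch’s API or configuration format. The `AlertRule` class, its fields, and the `should_alert` function are hypothetical names, and the sketch assumes a "higher is better" metric such as an evaluation pass rate.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertRule:
    """Hypothetical alert rule; not LangWatch's actual configuration schema."""

    # Alert if the metric falls below this absolute value.
    threshold: Optional[float] = None
    # Alert if the metric drops by more than this fraction relative to the baseline.
    max_relative_drop: Optional[float] = None


def should_alert(current: float, baseline: float, rule: AlertRule) -> list[str]:
    """Return the reasons (possibly none) why this metric value should alert."""
    reasons: list[str] = []

    # Condition 1: the metric crossed a fixed threshold.
    if rule.threshold is not None and current < rule.threshold:
        reasons.append("below_threshold")

    # Condition 2: the metric regressed against the baseline.
    if rule.max_relative_drop is not None and baseline > 0:
        relative_drop = (baseline - current) / baseline
        if relative_drop > rule.max_relative_drop:
            reasons.append("regressed_vs_baseline")

    return reasons


if __name__ == "__main__":
    rule = AlertRule(threshold=0.8, max_relative_drop=0.1)
    # Example: pass rate fell from a 0.90 baseline to 0.75.
    print(should_alert(current=0.75, baseline=0.90, rule=rule))
```

Keeping the two checks separate means an alert can say whether a metric is simply low or has actually become worse than before, which usually calls for different follow-up.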

Together, these updates make it easier to move from passive observability to proactive monitoring — and to catch issues before they impact users.

Upcoming webinar: testing agentic AI the right way

On 13 January, we’re hosting a technical webinar titled:

“LLM evals aren’t enough: Testing agentic AI the right way.”

Rogerio will be joined by Ron Kremer (PhD AI, ADC Data & AI) for a deep, practical discussion on how enterprise teams should think about evaluation and testing in the age of agentic systems.

If you’re building or deploying agents beyond simple single-step prompts, this one’s worth attending.

Latest content from LangWatch

If you missed some of our recent content, here are a few highlights to close out the year:

More deep dives, hands-on guides, and agent testing content are coming early in 2026.

Thanks for building with LangWatch this year. We’re excited about what’s ahead — and we’re looking forward to helping you ship better, safer, and more reliable agents in the year to come.

Happy holidays,

The LangWatch Team